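Serverless needs an event-triggering framework that is broadly compatible with many kinds of events, so that servers can be created from events in different application scenarios and expose services. Knative eventing aims to provide exactly such a framework. The event types that knative supports natively are still fairly limited, but the system offers an open container source that can be implemented to suit custom needs. The examples in this post are mainly based on the in-memory-channel from the official sample docs and are used to explain how eventing works.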

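Concepts

Knative as a whole is built on top of k8s, and its implementation relies entirely on the native k8s programming model. To understand how eventing works, we therefore start with the CRD resources it defines and how they interact.

CRD resources

Filtering the eventing CRDs on a k8s cluster shows the resource definitions that knative v0.7.0 provides, listed below. What does each one do, and how do they depend on each other? The following sections analyze this.

~ kc get crd | grep eventing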

By function, they fall into two broad groups:

source related

Abstract event type, applicable to every kind of source

eventtypes.eventing.knative.dev

The three sources supported by the system

apiserversources.sources.eventing.knative.dev

containersources.sources.eventing.knative.dev

cronjobsources.sources.eventing.knative.dev

broker-trigger related

Top-level abstraction

brokers.eventing.knative.dev

triggers.eventing.knative.dev

Logical layer

channels.eventing.knative.dev

subscriptions.eventing.knative.dev

Physical implementation

clusterchannelprovisioners.eventing.knative.dev
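The grouping above can also be expressed programmatically. The following is only a minimal sketch: the function names `group_of` and `group_crds` are made up for illustration, and the rule (API group `sources.eventing.knative.dev`, plus `eventtypes`, counts as source related) simply mirrors the classification used in this post.

```python
# CRD names exactly as listed in this post.
CRDS = [
    "eventtypes.eventing.knative.dev",
    "apiserversources.sources.eventing.knative.dev",
    "containersources.sources.eventing.knative.dev",
    "cronjobsources.sources.eventing.knative.dev",
    "brokers.eventing.knative.dev",
    "triggers.eventing.knative.dev",
    "channels.eventing.knative.dev",
    "subscriptions.eventing.knative.dev",
    "clusterchannelprovisioners.eventing.knative.dev",
]


def group_of(crd_name):
    """Classify one CRD the way this post does (hypothetical helper)."""
    kind, _, api_group = crd_name.partition(".")
    if api_group == "sources.eventing.knative.dev" or kind == "eventtypes":
        return "source"
    return "broker-trigger"


def group_crds(names):
    """Return {"source": [...], "broker-trigger": [...]} keyed by plural kind."""
    groups = {"source": [], "broker-trigger": []}
    for name in names:
        groups[group_of(name)].append(name.partition(".")[0])
    return groups


if __name__ == "__main__":
    for group, kinds in group_crds(CRDS).items():
        print(group, kinds)
```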

Relationships

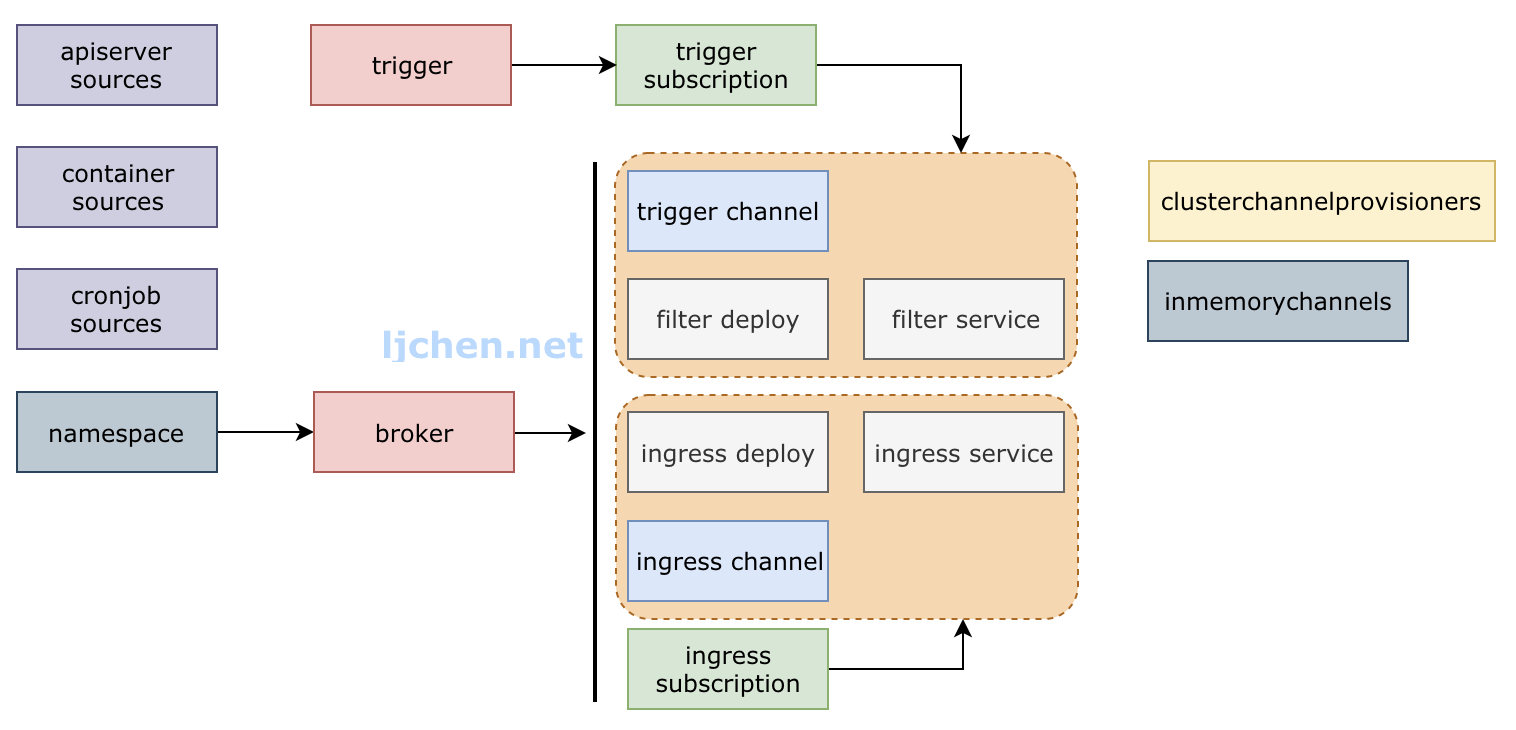

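The diagram above lists all the CRDs. Their roles and relationships are as follows:

- After any of the three source CRDs is created, its controller deploys the corresponding deployment. The deployment collects events from the producer and forwards them to the next hop, so it acts as the entry point for events into the knative-eventing system;

- When a namespace is created with the label knative-eventing-injection=enabled, knative automatically creates a default broker in that namespace;

- broker and trigger abstract the event forwarding logic. To implement a broker, the controller creates the broker's ingress-channel and trigger-channel, plus the deployments and services that forward events between the tenant's business namespace and the eventing system namespace;

- A trigger depends on a broker. If a trigger does not specify a broker, it uses the default broker automatically. The trigger explicitly defines the subscription;

- subscription involves two parts here. One is the business subscription and SINK that the user specifies when creating a trigger. The other is built into the system: after the trigger channel fans out a message, a reply path is needed, and ingress-channel and ingress-subscription exist to implement the forwarding logic of that path;

- clusterchannelprovisioners is still retained in the current version but will be deprecated later in favor of a dedicated CRD for each provisioner. It can be understood as the physical implementation underneath a channel, which fans out events by parsing the subscription information in the channel.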
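Two of the rules in the list above (default broker injection by namespace label, and a trigger falling back to the default broker) are simple enough to sketch in code. This is a simplified model, not the controller's actual implementation: the function names and the plain dict/set data shapes are assumptions made for illustration.

```python
INJECTION_LABEL = "knative-eventing-injection"  # label key named in this post
DEFAULT_BROKER = "default"


def wants_default_broker(namespace_labels):
    """True when the namespace carries knative-eventing-injection=enabled."""
    return namespace_labels.get(INJECTION_LABEL) == "enabled"


def broker_for(trigger_spec):
    """A trigger that names no broker falls back to the default broker."""
    return trigger_spec.get("broker") or DEFAULT_BROKER


def reconcile_namespace(name, labels, brokers):
    """Add a default broker for a labelled namespace.

    `brokers` is a set of (namespace, broker name) pairs, a stand-in for
    the Broker objects that the real controller would create.
    """
    if wants_default_broker(labels):
        brokers.add((name, DEFAULT_BROKER))
    return brokers


if __name__ == "__main__":
    labels = {INJECTION_LABEL: "enabled"}
    print(reconcile_namespace("default", labels, set()))
    print(broker_for({}))
```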

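broker-trigger example

Following the steps on the official site, we deploy a source of type ApiServerSource to collect k8s events, pass them through a series of channels, and finally deliver them to a ksvc that displays them. The detailed steps are in the official guide. Skipping the non-essential steps, we focus on how the core concepts are defined and how they relate to each other.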

consumer

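First prepare the ksvc that will display the received events. This resource is controlled by the autoscaler, so with no incoming requests the number of pod instances is scaled down to 0 automatically. The last part of the yaml file defines the knative service containers; the image used here prints the event information it receives (the image address has been replaced so that it can be pulled easily from behind the firewall in mainland China).

apiVersion: serving.knative.dev/v1alpha1

Once deployed, the corresponding pod and service information looks like this:

~ kc get deploy,svc,pod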

source

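The first piece is the event source. Since this is an ApiServerSource, we only need to apply the corresponding configuration file.

apiVersion: sources.eventing.knative.dev/v1alpha1

Note that the sink configured here points to the default broker. After this yaml is applied to k8s, a deployment is created in the corresponding namespace.

# apiserversource

Looking at the services, you will not find one for this deployment. The reason is that it only watches the k8s api-server, collects k8s event information and sends it to the corresponding channel, so it does not expose any service of its own. It also appears to have no sidecar, whereas every knative service has one. Why? For the same reason: this is a system service, not a business ksvc.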

broker

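The default broker is created automatically, but the namespace must first carry the corresponding label (without it the broker is not created automatically and the whole event path is broken), as follows:

kubectl label namespace default knative-eventing-injection=enabled

After running the command, the most visible result is that the broker default has been created (the broker's status shows its address, i.e. the address at which it can be reached). In the default namespace, two deployments have been deployed automatically. Listing the knative eventing CRDs, however, shows that more resources have been created.

# broker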

trigger

The trigger configuration is shown below:

apiVersion: eventing.knative.dev/v1alpha1

subscriber.ref shows that it sends the event content to the knative service event-display.

# trigger

Data plane

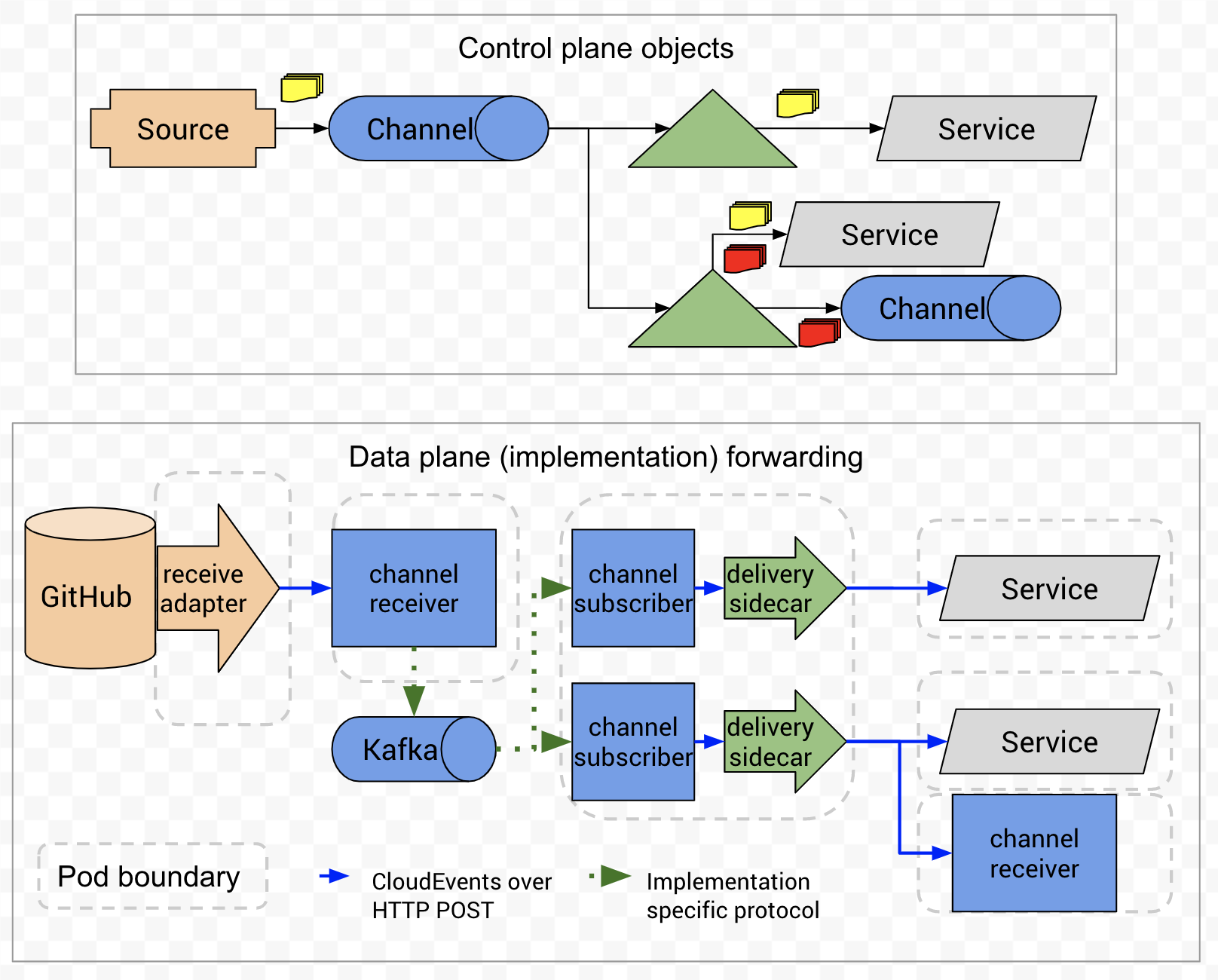

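The sections above covered the whole creation flow and the properties of the resulting resources. Next we analyze the data-plane flow of event forwarding, starting with an official diagram of the control plane and the forwarding plane.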

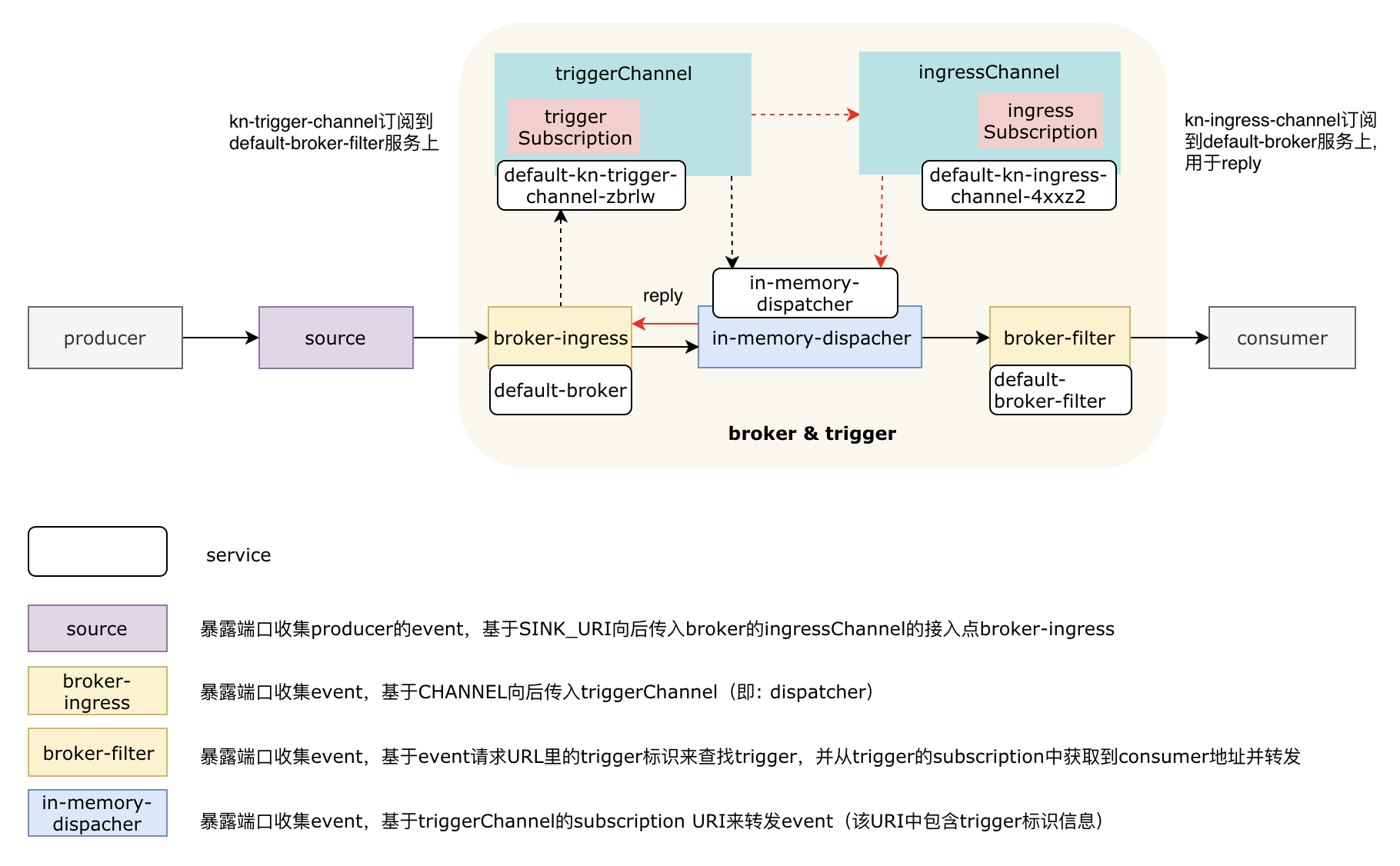

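Because that diagram is fairly old, it only reflects the channel and subscription layer, and its data plane is rather abstract. I therefore redrew it based on broker & trigger and the in-memory-channel.

In the redrawn diagram, the solid lines show the actual event forwarding path, while the dashed lines connecting broker-ingress and broker-filter to the channels above them are logical links. Black lines are event forwarding and red lines are the reverse reply path. in-memory-dispatcher is the underlying implementation of imc; with kafka it would be replaced by the kafka deployment. We do not go into detail here; the sections below walk through it step by step.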

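source

As mentioned earlier, once the apiserversource configuration is applied, the controller deploys a deployment in the namespace. This deployment collects k8s events and sends them to the specified SINK. Because we specified the default broker, the controller resolves that parameter into the address of the default-broker service (that is, the main entry point of the broker). See the comments in the output of the command below.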

~ kc get deployment.extensions/apiserversource-testevents-57knn -o yaml

Once an event is received, it is forwarded directly to the default-broker address given by SINK_URL. At this point the source's work is done. Next we look at default-broker.
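The sink resolution described above (a reference to the default broker becomes a concrete address taken from the broker's status) can be sketched as follows. This is an illustration under stated assumptions: `resolve_sink_url` is a hypothetical helper, the nested dict is a simplified stand-in for the Broker object, and the hostname is the standard k8s service DNS form for the default-broker service in the default namespace.

```python
def resolve_sink_url(sink_ref, namespace, brokers):
    """Turn a sink reference into the URL the source deployment sends to.

    sink_ref  -- e.g. {"kind": "Broker", "name": "default"}
    brokers   -- {(namespace, name): broker object as a dict}; simplified
                 stand-in for looking the Broker up through the API server
    """
    if sink_ref.get("kind") != "Broker":
        raise ValueError("this sketch only handles Broker sinks")
    broker = brokers[(namespace, sink_ref["name"])]
    # The broker's status carries its address (see the broker section).
    hostname = broker["status"]["address"]["hostname"]
    return f"http://{hostname}/"


if __name__ == "__main__":
    brokers = {
        ("default", "default"): {
            "status": {
                "address": {"hostname": "default-broker.default.svc.cluster.local"}
            }
        }
    }
    sink = {"kind": "Broker", "name": "default"}
    print(resolve_sink_url(sink, "default", brokers))
```

The resulting value plays the role of SINK_URL in the source deployment.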

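broker & trigger

Logically, a broker consists of an ingress-channel and a trigger-channel; on the data plane, the corresponding dispatcher runs in the eventing system namespace. In addition, the business namespace holds two deployments, broker-ingress and broker-filter, which forward events between the dispatcher and the business layer.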

broker-ingress

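Since we already know that traffic is forwarded to the default-broker service, we inspect that service and its endpoint address directly to identify the pod and deployment behind it.

# service, endpoint

Use the endpoint IP address to find the corresponding pod and deployment.

# pod

The deployment is configured as follows; the key information is marked in the comments.

~ kc get deploy default-broker-ingress -o yaml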

dispatcher

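At this point the trail seems to go cold: it is not obvious how an event is forwarded once it reaches in-memory-dispatcher. Reading the dispatcher code shows that this deployment forwards events according to the subscription configuration of the channel. Since the channel in question here is the triggerChannel, querying the channel default-kn-trigger shows:

# channel description "default-kn-trigger"

The dispatcher fans the message out to the subscriber URI, which is the default-broker-filter service.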
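The fanout behaviour can be illustrated with a small sketch. It is not the dispatcher's actual code: the subscriber entries are plain dicts with `subscriberURI` and `replyURI` keys chosen for illustration, `send` is an injected stand-in for the HTTP call, and the reply address in the usage example is a placeholder.

```python
def fanout(event, subscribers, send):
    """Deliver one event to every subscriber of a channel.

    subscribers -- list of dicts with "subscriberURI" and optional "replyURI"
    send        -- callable(uri, event) -> reply event or None
    Returns the list of URIs that were called, in order.
    """
    called = []
    for sub in subscribers:
        reply = send(sub["subscriberURI"], event)
        called.append(sub["subscriberURI"])
        # A returned event goes back through the reply path, if one is set.
        if reply is not None and sub.get("replyURI"):
            send(sub["replyURI"], reply)
            called.append(sub["replyURI"])
    return called


if __name__ == "__main__":
    def fake_send(uri, event):
        print("POST", uri, event)
        return None  # the subscriber produced no reply

    subs = [{
        "subscriberURI": "http://default-broker-filter.default.svc.cluster.local/"
                         "triggers/default/testevents-trigger/"
                         "99849cd0-a05a-11e9-84ef-525400ff729a",
        "replyURI": "http://reply.example/",  # placeholder address
    }]
    fanout({"type": "example"}, subs, fake_send)
```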

broker-filter

~ kc get svc default-broker-filter

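Here we seem to be stuck again, because the configuration of default-broker-filter reveals nothing about its next hop. Analyzing the code shows that it uses the path in the request URI (http://default-broker-filter.default.svc.cluster.local/triggers/default/testevents-trigger/99849cd0-a05a-11e9-84ef-525400ff729a) to obtain the trigger name (default/testevents-trigger), then reads that trigger's subscriber to locate the next hop, and finally sends the event to the knative service, event-display.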

apiVersion: eventing.knative.dev/v1alpha1
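The path-based lookup that broker-filter performs can be sketched as below. This is a minimal illustration rather than the actual broker-filter code: `parse_trigger_path` and `next_hop` are hypothetical names, the path layout `/triggers/<namespace>/<name>/<uid>` is inferred from the URI shown above, and the `triggers` dict stands in for reading the Trigger object.

```python
from urllib.parse import urlparse


def parse_trigger_path(uri):
    """Extract (namespace, trigger name, uid) from a broker-filter request URI."""
    parts = urlparse(uri).path.strip("/").split("/")
    if len(parts) != 4 or parts[0] != "triggers":
        raise ValueError(f"unexpected path in {uri!r}")
    _, namespace, name, uid = parts
    return namespace, name, uid


def next_hop(uri, triggers):
    """Look up the trigger named in the URI and return its subscriber.

    triggers -- {(namespace, name): {"subscriber": ...}}, a simplified
                stand-in for the Trigger resources in the cluster
    """
    namespace, name, _ = parse_trigger_path(uri)
    return triggers[(namespace, name)]["subscriber"]


if __name__ == "__main__":
    uri = ("http://default-broker-filter.default.svc.cluster.local"
           "/triggers/default/testevents-trigger/"
           "99849cd0-a05a-11e9-84ef-525400ff729a")
    triggers = {("default", "testevents-trigger"): {"subscriber": "event-display"}}
    print(parse_trigger_path(uri))
    print(next_hop(uri, triggers))
```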